Image-to-Image Translation using GANs

Overview:

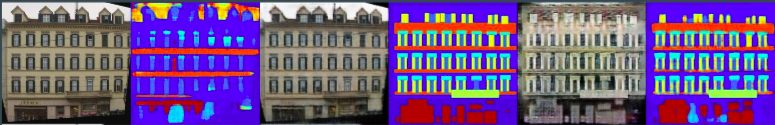

I was first introduced to the idea of using cycle consistency for image-transformation tasks while reading the CycleGAN paper. Image-to-image translation had been studied before, but using a cycle-consistency measure as a form of regularisation struck me as very interesting. It was also something I could reason about, since I had worked with Capsule Networks on a similar idea. The paper emphasised relaxing the requirement for paired training data across domains, so that an image can be translated from Domain A to Domain B even when no corresponding image exists in Domain B, and vice versa.
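A minimal PyTorch sketch of the cycle-consistency term, assuming two generators `g_ab` (A→B) and `g_ba` (B→A) and an L1 distance between each image and its round-trip reconstruction. The single-layer convolutional generators are hypothetical stand-ins for the much deeper networks used in practice.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def cycle_consistency_loss(g_ab: nn.Module, g_ba: nn.Module,
                           real_a: torch.Tensor, real_b: torch.Tensor) -> torch.Tensor:
    """L1 cycle-consistency loss: translating to the other domain and back
    should reproduce the original image, in both directions."""
    rec_a = g_ba(g_ab(real_a))  # A -> B -> A
    rec_b = g_ab(g_ba(real_b))  # B -> A -> B
    return F.l1_loss(rec_a, real_a) + F.l1_loss(rec_b, real_b)


if __name__ == "__main__":
    torch.manual_seed(0)
    # Hypothetical toy generators, for illustration only.
    g_ab = nn.Conv2d(3, 3, kernel_size=3, padding=1)
    g_ba = nn.Conv2d(3, 3, kernel_size=3, padding=1)
    real_a = torch.rand(4, 3, 32, 32)  # unpaired batch from Domain A
    real_b = torch.rand(4, 3, 32, 32)  # unpaired batch from Domain B
    loss = cycle_consistency_loss(g_ab, g_ba, real_a, real_b)
    loss.backward()  # gradients flow into both generators
    print(loss.item())
```

Note that `real_a` and `real_b` never need to correspond to each other: each image is only compared with its own reconstruction, which is what removes the need for paired data.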

I soon came across another paper built on a similar idea: DualGAN. Instead of a cycle-consistency measure, it enforces similar conditions by leveraging primal-dual relationships (think dual(dual) == primal, as in the duality theorems for linear programs from mathematical optimization). On closer inspection, the two papers (DualGAN and CycleGAN) rest on the same underlying principle: DualGAN frames it as a primal-dual relation and uses a reconstruction loss, whereas CycleGAN frames it as a cyclic mapping and uses a cycle-consistency loss.
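Written in a shared, simplified notation (assumed here: $G_{AB}: A \to B$ and $G_{BA}: B \to A$ are the two generators, with any extra generator inputs omitted), both content-preserving terms take the same L1 form; CycleGAN calls it the cycle-consistency loss and DualGAN the reconstruction loss:

$$
\mathcal{L}_{\text{cyc/rec}} = \mathbb{E}_{a \sim A}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big] + \mathbb{E}_{b \sim B}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big]
$$

The "dual of the dual is the primal" intuition is the requirement that each round trip behaves like the identity map:

$$
G_{BA} \circ G_{AB} \approx \mathrm{id}_A, \qquad G_{AB} \circ G_{BA} \approx \mathrm{id}_B
$$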

Note that in both papers:

- the reconstruction/cycle-consistency loss is what helps prevent mode collapse, i.e. the generator mapping every input to the same output image

- the final loss is a two-part objective: an adversarial loss that captures the style and a cycle-consistency/reconstruction loss that preserves the content. It relies on the empirical assumption that content is easier to preserve and style is easier to change
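The two-part objective can be sketched for the generators as follows. This assumes a least-squares adversarial loss and a weight of `lambda_cyc = 10` on the cycle term (the settings the CycleGAN paper uses); `d_a` and `d_b` are hypothetical discriminators for Domains A and B, and any network works as long as it returns a tensor of real/fake scores.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def generator_objective(g_ab: nn.Module, g_ba: nn.Module,
                        d_a: nn.Module, d_b: nn.Module,
                        real_a: torch.Tensor, real_b: torch.Tensor,
                        lambda_cyc: float = 10.0):
    """Two-part generator loss: adversarial 'style' terms plus a weighted
    cycle-consistency 'content' term (least-squares GAN formulation assumed)."""
    fake_b = g_ab(real_a)  # A -> B
    fake_a = g_ba(real_b)  # B -> A

    # Style: each generator tries to make its discriminator output 1 ("real").
    pred_b = d_b(fake_b)
    pred_a = d_a(fake_a)
    adv = (F.mse_loss(pred_b, torch.ones_like(pred_b))
           + F.mse_loss(pred_a, torch.ones_like(pred_a)))

    # Content: translating back to the source domain must recover the input.
    cyc = F.l1_loss(g_ba(fake_b), real_a) + F.l1_loss(g_ab(fake_a), real_b)

    total = adv + lambda_cyc * cyc
    return total, adv, cyc
```

A large `lambda_cyc` favours content preservation over style transfer; setting it to 0 leaves only the adversarial terms, which constrain the output distribution but not which output each input maps to.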

Later, I applied CycleGANs to image-enhancement problems such as rain removal, cloud removal, and dehazing. I improved the quality of the generated images by increasing the number of generator updates per discriminator update; this was motivated by the observation that the discriminator loss dropped sharply when only one generator update was used, suggesting the discriminator was overpowering the generator. I also improved training stability by using the Wasserstein distance with a gradient penalty (instead of weight clipping). I'm currently working on a generalisable image-enhancement solution using CycleGAN as the base architecture, so stay tuned for progress!
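A rough sketch of both training changes, assuming the WGAN-GP formulation (critic loss E[D(fake)] - E[D(real)] plus a gradient penalty weighted by 10) and, for brevity, only the A→B direction with its cycle term. `train_step` and `n_gen_steps` are hypothetical names, not the project's actual code.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic, real: torch.Tensor, fake: torch.Tensor) -> torch.Tensor:
    """WGAN-GP penalty: push the critic's gradient norm towards 1 on random
    interpolates between real and fake samples (replaces weight clipping)."""
    eps = torch.rand(real.size(0), 1, 1, 1, device=real.device)  # one mix ratio per sample
    interp = (eps * real.detach() + (1 - eps) * fake.detach()).requires_grad_(True)
    scores = critic(interp)
    # create_graph=True so the penalty itself can be backpropagated into the critic.
    grads, = torch.autograd.grad(outputs=scores.sum(), inputs=interp, create_graph=True)
    grad_norm = grads.flatten(start_dim=1).norm(2, dim=1)
    return ((grad_norm - 1) ** 2).mean()


def train_step(g_ab, g_ba, critic_b, opt_g, opt_d, real_a, real_b,
               n_gen_steps=2, gp_weight=10.0, lambda_cyc=10.0):
    """One critic update followed by n_gen_steps generator updates (A -> B only)."""
    if n_gen_steps < 1:
        raise ValueError("n_gen_steps must be at least 1")

    # Critic update: Wasserstein estimate plus gradient penalty.
    fake_b = g_ab(real_a).detach()  # no generator gradients during the critic step
    d_loss = (critic_b(fake_b).mean() - critic_b(real_b).mean()
              + gp_weight * gradient_penalty(critic_b, real_b, fake_b))
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # Several generator updates per critic update.
    for _ in range(n_gen_steps):
        fake_b = g_ab(real_a)
        adv = -critic_b(fake_b).mean()                      # fool the critic
        cyc = (g_ba(fake_b) - real_a).abs().mean()          # A -> B -> A reconstruction
        g_loss = adv + lambda_cyc * cyc
        opt_g.zero_grad()
        g_loss.backward()
        opt_g.step()
    return d_loss.item(), g_loss.item()
```

Note that the reference WGAN-GP recipe does the opposite (several critic updates per generator update); the schedule above follows the one described in this write-up, where the discriminator was winning too easily. Critic gradients left over from the generator steps are cleared by `opt_d.zero_grad()` before the next critic backward pass.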

Technical Details

- Language: Python

- Framework: TensorFlow 1 (DualGAN), PyTorch (CycleGAN)